Projects in SenPAI

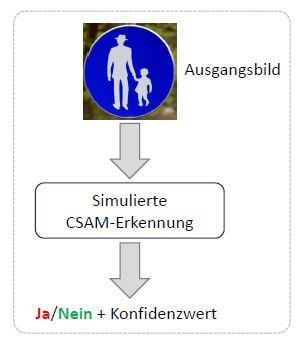

DeCNeC: Detecting CSAM Without the Need for CSAM Training Data

The detection of child sexual abuse material (CSAM) presents a significant challenge for law enforcement agencies worldwide. While known CSAM, such as material seized during investigations, can be identified using hash-matching techniques (e.g., perceptual hashes like PhotoDNA), detecting unknown CSAM requires more advanced methods, particularly those based on artificial intelligence (AI). However, using CSAM as AI training data is highly problematic because handling such material is a criminal offense (see §§ 184b and 184c StGB), which especially affects research institutions.
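
To illustrate how hash matching recognizes known material, the following minimal Python sketch uses the simple average hash (aHash) rather than PhotoDNA, which is proprietary and works differently. It assumes each image has already been converted to grayscale and downscaled to 8×8 pixels; the function names and the distance threshold of 5 bits are illustrative assumptions.

```python
# Minimal perceptual-hash matching sketch using average hash (aHash).
# Assumption: `pixels` is an 8x8 grayscale image (values 0-255) that has
# already been downscaled; production systems such as PhotoDNA differ.

def average_hash(pixels):
    """Return a 64-bit hash: a bit is 1 where a pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_known(image_hash, known_hashes, max_distance=5):
    """True if the hash lies within `max_distance` bits of any known hash.

    The threshold is a hypothetical value. Tolerating small differences
    (re-encoding, brightness changes) is what distinguishes perceptual
    hashes from cryptographic hashes.
    """
    return any(hamming_distance(image_hash, k) <= max_distance for k in known_hashes)
```

A uniformly brightened copy of an image yields the same aHash, whereas a cryptographic hash would change completely. The same property explains the limitation described above: an image that has never been hashed cannot be matched.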

This is where the DeCNeC project comes in: it focuses on detecting unknown CSAM without relying on CSAM data. The project explores and develops innovative approaches in computer vision. The core idea is to combine separately learned concepts to enable CSAM detection. Specifically, classifiers are trained separately on adult pornographic scenes and on harmless images of children in everyday situations. These classifiers are then fused to identify potential overlaps (and thus CSAM). In addition, DeCNeC researches image enhancement techniques for working effectively with the low-quality images that may arise in practice. In parallel, the project develops solutions to challenges such as problematic pose representations (e.g., as defined by the COPINE scale).
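
The fusion step can be pictured as a late (score-level) fusion of two independently trained classifiers. The sketch below is an assumption for illustration only; the project may instead fuse features or learn the fusion. The input names `p_explicit` and `p_child`, the fusion rules, and the review threshold are hypothetical, and a positive result is meant to route an image to human review, not to decide a case.

```python
from dataclasses import dataclass


@dataclass
class FusionResult:
    score: float
    flag_for_review: bool


def fuse_scores(p_explicit, p_child, threshold=0.5, rule="product"):
    """Late fusion of two concept classifiers (hypothetical interface).

    p_explicit: probability from a classifier trained on adult pornographic scenes.
    p_child:    probability from a classifier trained on everyday images of children.
    Only images where BOTH concepts are likely receive a high fused score.
    """
    for p in (p_explicit, p_child):
        if not 0.0 <= p <= 1.0:
            raise ValueError("scores must be probabilities in [0, 1]")
    if rule == "product":
        # Treats the two scores as independent evidence.
        score = p_explicit * p_child
    elif rule == "min":
        # Conservative "weakest link" rule: the lower score decides.
        score = min(p_explicit, p_child)
    else:
        raise ValueError(f"unknown fusion rule: {rule!r}")
    return FusionResult(score=score, flag_for_review=score >= threshold)
```

Neither classifier alone can trigger a flag: an image that scores high on only one concept stays below the threshold.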

In close collaboration with law enforcement agencies, DeCNeC aims to develop ethical and practical detection methods that can support investigative work with precision and efficiency.

FROST + ML: Forensic and OSINT Technology with Machine Learning

The "FROST+ML" project is investigating how machine learning (ML) can support digital forensics. It addresses problems and challenges found in common methods used in “local” digital forensics and in Open Source Intelligence (OSINT). The aim is to develop and evaluate a framework that facilitates the application of ML technologies to research questions in digital forensics. This should result in new or improved solutions in this area.

LAVA: LLM-Aided and Affected Authorship Verification/Attribution

The development of large language models (LLMs) has changed how digital texts are created and has introduced new challenges for establishing the authorship of texts.

The LAVA project focuses on two central use cases of digital text forensics: authorship attribution (AA) and authorship verification (AV). AA addresses the question of which person wrote a given anonymous text, while AV determines whether two given texts were written by the same person. In view of the steadily growing share of LLM-generated texts, four central research objectives have been identified that LAVA will investigate in more detail:

- Authorship attribution of human-written texts, using LLMs to extract stylistic features

- Authorship verification of human-written texts, using LLMs to extract stylistic features

- Authorship attribution in the context of LLM-generated versus human-written texts, including identifying the LLM that was used

- Attribution of texts written or generated jointly by humans and LLMs

The aim of the research conducted in LAVA is to support the identification and verification of the actual authorship of texts as the boundaries between human-written and machine-generated content become increasingly blurred.
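
For orientation, authorship verification is often framed as comparing two style representations and thresholding their similarity. The sketch below uses a classic stylometric baseline, character 3-gram frequency profiles compared by cosine similarity, not the LLM-based feature extraction that LAVA investigates. The threshold of 0.5 is a placeholder that would have to be calibrated on texts of known authorship.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Frequency profile of overlapping character n-grams (lowercased)."""
    text = text.lower()
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def cosine(a, b):
    """Cosine similarity between two sparse frequency profiles."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def same_author(text_a, text_b, threshold=0.5, n=3):
    """Return (decision, score); the threshold is a placeholder to be calibrated."""
    score = cosine(char_ngrams(text_a, n), char_ngrams(text_b, n))
    return score >= threshold, score
```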

RoMa: Robustness in Machine Learning

RoMa aims to provide mechanisms that improve the security of ML in the application projects. RoMa will interact with the other technology projects regarding attack and solution models. Its objective is to increase the robustness of neural networks and other ML algorithms against attacks that alter input data at test time, either to evade correct classification or to force a specific, attacker-chosen classification.
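
The kind of test-time attack described above can be illustrated with the fast gradient sign method (FGSM), a standard evasion attack, applied here to a toy logistic-regression model in plain Python. The weights, input, and perturbation budget are made up for illustration; the sketch shows the attack, not a RoMa defense.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """P(y = 1 | x) for a logistic-regression model with weights w and bias b."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast gradient sign method for binary logistic regression.

    For the cross-entropy loss, the gradient with respect to the input is
    (p - y) * w. Each feature is moved by epsilon in the direction that
    increases the loss, so no feature changes by more than epsilon.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1
x_adv = fgsm(w, b, x, y, epsilon=1.0)
print(predict_proba(w, b, x) > 0.5, predict_proba(w, b, x_adv) > 0.5)  # True False
```

The correctly classified input is flipped to the wrong class by a perturbation bounded per feature, which is exactly the evasion scenario robustness research tries to prevent.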

SecLLM: Security in Large Language Models

This project aims to analyze the security threats to large language models (LLMs) and to propose defense mechanisms against them. The vulnerabilities these models may face are currently still largely unknown. Furthermore, even for the vulnerabilities that have been identified, the most effective defense strategies have yet to be determined. We aim to develop a taxonomy of current attacks and to investigate new potential attacks, focusing in particular on prompt injection, backdoors, and privacy leaks. For example, it has been shown that the behavior of an LLM can be hijacked by introducing hidden prompts through cross-site scripting and then used to conduct phishing attacks. Given the rapid adoption of LLMs in commercial applications, these applications may inherit the security threats of the underlying models. Providing security guarantees that ensure trust and safety in these models is therefore of utmost importance.
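
One way hidden prompts reach a model is through page content that a human reader never sees but that a scraper passes on verbatim. The minimal sketch below uses Python's standard `html.parser` module to separate visible text from text inside elements hidden with an inline `display: none` style or the `hidden` attribute, so that the latter can be flagged before it enters a prompt. It is a heuristic illustration under these assumptions, not a defense developed in SecLLM; text can be hidden in many other ways (CSS classes, off-screen positioning, tiny fonts).

```python
from html.parser import HTMLParser

# Elements without a closing tag; they must not be pushed onto the stack.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img",
                 "input", "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Split page text into visible and hidden parts (heuristic sketch).

    Only two hiding techniques are detected: an inline style containing
    `display:none` and the boolean `hidden` attribute. Hidden state is
    inherited by all descendants of a hidden element.
    """

    def __init__(self):
        super().__init__()
        self._hidden_stack = []  # one entry per open element: is it hidden?
        self.visible = []
        self.hidden = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_ELEMENTS:
            return
        attributes = dict(attrs)
        style = (attributes.get("style") or "").replace(" ", "").lower()
        hidden_here = "display:none" in style or "hidden" in attributes
        parent_hidden = self._hidden_stack[-1] if self._hidden_stack else False
        self._hidden_stack.append(parent_hidden or hidden_here)

    def handle_endtag(self, tag):
        if tag not in VOID_ELEMENTS and self._hidden_stack:
            self._hidden_stack.pop()

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self._hidden_stack and self._hidden_stack[-1]:
            self.hidden.append(text)
        else:
            self.visible.append(text)
```

Text collected in `hidden` could then be dropped or shown to the user before the visible text is passed to an LLM. The stack assumes well-formed HTML; mismatched closing tags would shift it.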

Protecting Privacy and Sensitive Information in Texts

The goal of this project is to explore Natural Language Processing methods that can dynamically identify and obfuscate sensitive information in texts, with a focus on implicit attributes of individuals, for example their ethnic background, income range, or personality traits. These methods will help to preserve the privacy of all individuals: both the authors and other persons mentioned in the text. Furthermore, we go beyond specific text sources, such as social media, and aim to develop robust and highly adaptable methods that generalize across domains and registers. Our research program encompasses three areas. First, we will extend the theoretical framework of differential privacy to our implicit text obfuscation scenario; the research questions include fundamental privacy questions related to textual datasets.

Second, we will determine to what extent unsupervised pre-training achieves domain-agnostic privatization. Third, we will address the large gap between formal guarantees and meaningful privacy preservation, which stems from a mismatch between the theoretical bounds and existing evaluation techniques that are based on attacking the systems.
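
Differential privacy bounds how much any single record can influence a released result. The sketch below shows the textbook Laplace mechanism for a counting query over a document collection (for example, how many documents mention a given attribute); it is the standard starting point of the framework, not the project's implicit-attribute obfuscation method. Adding or removing one document changes the count by at most 1, so Laplace noise with scale 1/ε gives ε-differential privacy for this query. The function names and toy documents are illustrative.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential variables with mean
    # `scale` follows a Laplace distribution with location 0 and that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(documents, predicate, epsilon, rng=None):
    """Release the number of documents matching `predicate` with epsilon-DP.

    Sensitivity is 1: adding or removing one document changes the true count
    by at most 1, so Laplace noise with scale 1 / epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for doc in documents if predicate(doc))
    return true_count + laplace_noise(1.0 / epsilon, rng)


docs = ["I moved here from abroad", "great weather today", "my salary is low"]
print(private_count(docs, lambda d: "salary" in d, epsilon=0.5, rng=random.Random(42)))
```

A smaller ε adds more noise and gives stronger privacy; the gap between such formal guarantees and what attacks on real text data reveal is what the third research area targets.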

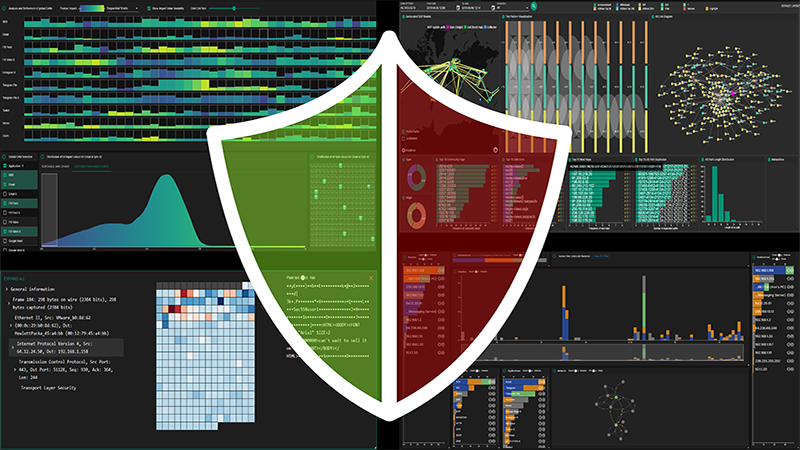

VCAXAI: Interactive Visual Cyber Analytics for Trust and Explainability in Artificial Intelligence for Sensitive Data

This project is concerned with the integration of Machine Learning (ML) and Visual Analytics in the domain of cybersecurity. The focus is on Explainable AI (XAI) and enhancing the comprehension of collected cybersecurity data while improving the transparency and interpretability of ML. The project employs state-of-the-art AI techniques in cybersecurity scenarios with the objective of assisting cybersecurity analysts in comprehending network behavior and identifying threats with greater precision and resilience.

The integration of ML and Visual Analytics (VA) in the project's framework is pivotal for creating interactive tools that help analysts understand not only the data itself but also the AI-driven decisions based on it. The approach relies on human-centered design and expert involvement at every stage, ensuring the reliability and interpretability of the AI models as well as their alignment with real-world cybersecurity needs.
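
As an illustration of the kind of explanation an interactive VA tool can display, the sketch below computes permutation feature importance, a common model-agnostic XAI technique: shuffle one feature and measure how much the model's accuracy drops. The technique is chosen for illustration and is not necessarily one used in VCAXAI; the rule-based detector and the two flow features (packets per second, a port class) are hypothetical.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is randomly shuffled.

    X is a list of feature rows (lists), y the true labels, and model any
    callable mapping a row to a label. A drop near 0 means the model does
    not rely on that feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_detector(flow):
    # Hypothetical rule: flag a flow as an attack if packets/second exceeds 150.
    # The second feature (port class) is deliberately ignored.
    return int(flow[0] > 150)


flows = [[i * 10.0, float(i % 3)] for i in range(30)]
labels = [int(f[0] > 150) for f in flows]
print(permutation_importance(toy_detector, flows, labels))
```

Here the port-class feature receives an importance of exactly 0, which an analyst could read directly as "the detector ignores this feature", the kind of insight a visual front end can make explicit.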

VisPer: Visual Forensic Person Verification

Person re-identification is already successfully applied in various contexts, such as biometric access control or real-time surveillance of public spaces. In contrast to these established applications, person re-identification in forensic analysis remains a significant challenge. Relevant personal characteristics are often partially obscured by occlusions or low image resolution and may also change over time, for example facial features as a result of aging, or hairstyle, body shape, and clothing style.

The VisPer project investigates how modern computer vision methods can be used to re-identify individuals under these and other challenging conditions. Particular emphasis is placed on so-called soft biometric traits, such as tattoos or scars, which remain stable over long periods of time. To effectively support forensic practitioners in casework, person re-identification systems must be adaptable to varying forensic requirements and provide interpretable, transparent decisions.

For this reason, the system developed within VisPer is explicitly designed for collaboration with forensic experts. Matching criteria can be adjusted on a case-by-case basis, and the resulting decisions are explained in a transparent manner, allowing for human verification.
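
Case-adjustable matching criteria with transparent decisions can be sketched as a weighted combination of per-trait similarities that also reports each trait's contribution. The trait names, feature vectors, and weighting scheme below are hypothetical; how VisPer actually represents and combines traits is not specified here.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def explainable_match(query, candidate, weights):
    """Weighted per-trait matching with a per-trait explanation.

    query, candidate: dict mapping a trait name to a feature vector
                      (a missing trait means it is not visible, e.g. occluded).
    weights:          dict mapping a trait name to the examiner's weight for
                      this case; a weight of 0 disables the trait.
    Returns the overall score in [-1, 1] and each trait's weighted contribution.
    """
    contributions = {}
    for trait, weight in weights.items():
        if weight <= 0 or trait not in query or trait not in candidate:
            continue
        contributions[trait] = weight * cosine_similarity(query[trait], candidate[trait])
    used_weight = sum(weights[t] for t in contributions)
    score = sum(contributions.values()) / used_weight if used_weight else 0.0
    return score, contributions


query = {"tattoo": [1.0, 0.0], "face": [0.0, 1.0]}
candidate = {"tattoo": [1.0, 0.0], "face": [1.0, 0.0]}
print(explainable_match(query, candidate, {"tattoo": 1.0, "face": 1.0}))
# (0.5, {'tattoo': 1.0, 'face': 0.0})
```

Setting the face weight to 0, for instance when aging makes facial comparison unreliable, lets the tattoo alone decide, and the returned contributions show the examiner which trait drove the result.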

In close cooperation with law enforcement agencies, VisPer will develop new and effective methods for person matching in forensic analysis. Potential use cases include the identification of missing persons as well as the re-identification of victims or suspects.

Finished Projects

Adversarial Attacks on NLP systems

Duration: 01.01.2020 - 31.12.2023

The project focused on a second challenge in ML/AI security, in which AI systems are used as attackers. The focus here was on natural language processing (NLP), that is, on textual data. The project dealt with hate speech and disinformation, which are relevant scenarios in OSINT applications. Results can also be used in the SePIA project, as OSINT is often based on text data. Scientific findings from the project include:

Robustness and debiasing

- New method called Confidence Regularization, which mitigates known and unknown biases

- Novel debiasing framework that can handle multiple biases at once
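
In its published description, Confidence Regularization is a self-distillation scheme: a teacher model's predicted distribution is softened more strongly for training examples that a separate bias-only model already classifies confidently, and the main model is trained on these softened targets. The sketch below shows that scaling step and a cross-entropy against the scaled targets, following our reading of the published formulation; the function names are ours, and the original paper should be consulted for the exact training setup.

```python
import math


def scale_teacher_probs(teacher_probs, bias_prob_correct):
    """Soften teacher probabilities by the bias model's confidence.

    bias_prob_correct (beta) is the bias-only model's probability for the
    gold label. Each teacher probability is raised to 1 - beta and the result
    renormalized: beta = 0 keeps the teacher distribution, beta = 1 flattens
    it to uniform, so examples solvable via the bias get softer targets.
    """
    exponent = 1.0 - bias_prob_correct
    scaled = [p ** exponent for p in teacher_probs]
    total = sum(scaled)
    return [s / total for s in scaled]


def soft_cross_entropy(student_probs, target_probs, eps=1e-12):
    """Cross-entropy of student predictions against soft targets."""
    return -sum(t * math.log(max(s, eps)) for t, s in zip(target_probs, student_probs))
```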

Model efficiency

- New adapter architecture called Adaptable Adapters that allows an efficient fine-tuning of language models

- New transformer architecture using Rational Activation Functions
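
Rational activation functions replace a fixed nonlinearity such as ReLU with a ratio of two polynomials whose coefficients are learned during training. The sketch below evaluates one "safe" form used in the literature, in which the denominator is written as 1 + |Q(x)| so that it never reaches zero; other variants exist, and the exact parametrization used in the project is not specified in this summary.

```python
def rational_activation(x, numerator, denominator):
    """Evaluate P(x) / (1 + |Q(x)|).

    numerator:   coefficients a_0..a_m of P(x) = a_0 + a_1 x + ... + a_m x^m
    denominator: coefficients b_1..b_n of Q(x) = b_1 x + ... + b_n x^n
    The 1 + |Q(x)| form keeps the denominator >= 1, avoiding poles.
    """
    p = sum(a * x ** j for j, a in enumerate(numerator))
    q = sum(b * x ** k for k, b in enumerate(denominator, start=1))
    return p / (1.0 + abs(q))


# With P(x) = x and Q(x) = x the function reduces to softsign, x / (1 + |x|).
print(rational_activation(1.0, [0.0, 1.0], [1.0]))  # 0.5
```

In a network, the coefficient lists would be trainable parameters and the function would be applied elementwise in place of a fixed activation.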

Data collection

- Analyzing sources of bias and making data collection more inclusive by exploring the potential of citizen scientists

Evaluation of generative models (e.g., LLMs)

- Identifying pitfalls and issues when using existing inference heuristics for evaluation

- Novel synthetic data generation framework: FALSESUM

SePIA: SEcurity and Privacy In Automated OSINT

Duration: 01.01.2020 - 31.12.2024

SePIA is an application project addressing various challenges in automated Open Source Intelligence. Its objectives are to encapsulate the OSINT process in a secure environment following privacy by design, and to apply advanced crawling and information-gathering concepts to automate the search of available data sources, including the use of ML to improve the state of the art in crawling. Furthermore, SePIA aims to improve data cleansing by adding a feedback loop to the crawling and analysis modules, and to improve ML-based analysis methods for automated intelligence results.

XReLeaS: Explainable Reinforcement Learning for Secure Intelligent Systems

Duration: 01.07.2019 - 31.12.2024

This project addresses the important aspects of transparency and of explainable results and networks in ML. The aim is to build a software toolbox for explainable ML that also strengthens other security aspects of the algorithms. A robotic environment serves as an example application.